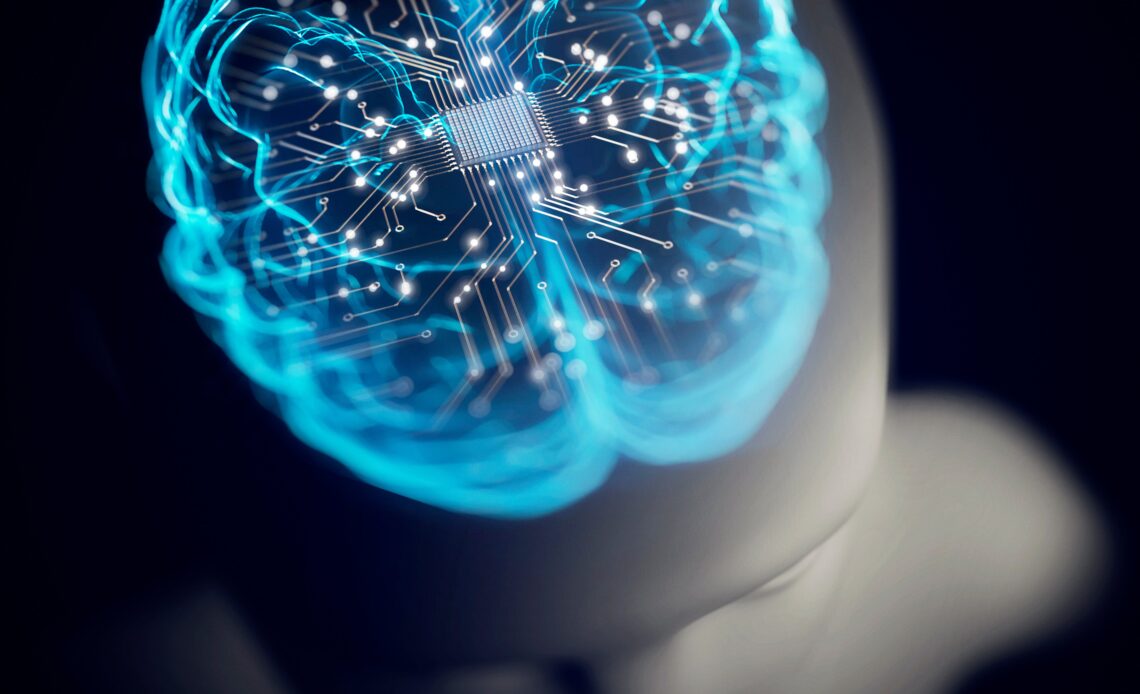

Artificial intelligence and human thought both run on electricity, but that’s about where the physical similarities end. AI’s output arises from silicon and metal circuitry; human cognition arises from a mass of living tissue. And the architectures of these systems are fundamentally different, too. Conventional computers store and compute information in distinct parts of the hardware, shuttling data back and forth between memory and microprocessor. The human brain, on the other hand, entangles memory with processing, helping to make it more efficient.

Computers’ relative inefficiency makes running AI models extremely costly. Data centers, where computing machines and hardware are stored, account for 1 to 1.5 percent of global electricity use, and by 2027, new AI server units could consume at least 85.4 terawatt-hours annually, or more than many small countries use every year. The brain is “way more efficient,” says Mark Hersam, a chemist and engineer at Northwestern University. “We’ve been trying for years to come up with devices and materials that can better mimic how the brain does computation,” he adds, referring to what are called neuromorphic computer systems.

Now Hersam and his colleagues have taken a crucial early step toward this goal by redesigning one of electronic circuitry’s most fundamental building blocks, the transistor, to function more like a neuron. Transistors are minuscule, switchlike devices that control and generate electrical signals—they are like the nerve cells of a computer chip and underlie nearly all modern electronics. The new type of transistor, called a moiré synaptic transistor, integrates memory with processing to use less energy. As described in a study in Nature last December, these brainlike circuits improve energy efficiency and allow AI systems to move beyond simple pattern recognition and into more brainlike decision-making.

To incorporate memory directly into how transistors function, Hersam’s team turned to two-dimensional materials with remarkably thin arrangements of atoms that, when layered on top of one another at different orientations, form mesmerizing, kaleidoscopelike patterns called moiré superstructures. When an electrical current is applied…
